- Scalable Distributed Monitoring System for HPC Clusters

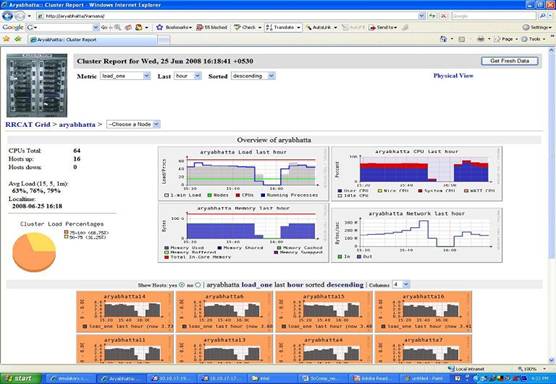

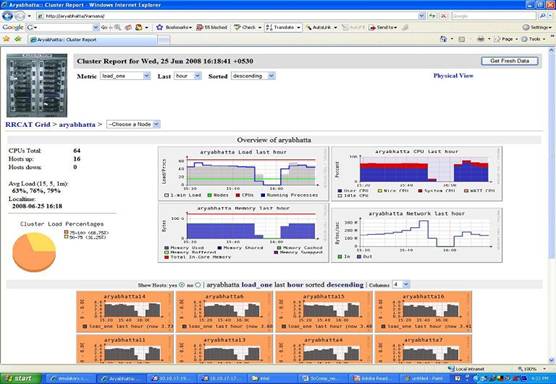

The web-based, scalable distributed monitoring system 'Ganglia' (version 3.0.4) for High Performance Computing systems has been configured and deployed on RRCATNet. At present, the HPC cluster 'Aryabhatta' is monitored by this system.

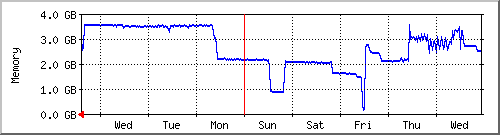

Ganglia readily provides consolidated cluster usage in terms of load, CPU, memory and network. It also shows detailed usage for each node of the cluster, covering load, CPU, memory, network, disk, packets in/out and similar metrics. All of these details can be viewed in graphical form over periods ranging from the last hour to the last year.

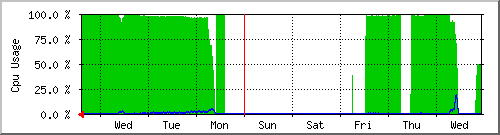

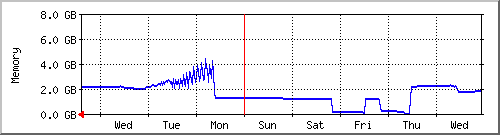

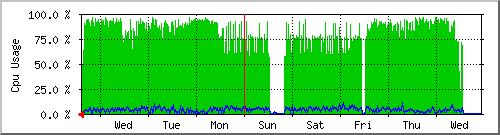

Output of 'Ganglia' showing Load, CPU, Memory and Network usage of Aryabhatta cluster
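Ganglia's gmond daemon reports cluster state as an XML dump, by default on TCP port 8649. The following Python is a rough sketch of how a consolidated figure such as average cluster load can be derived from per-node metrics. It parses a hand-written sample in that layout. The host names and values are invented for illustration, and a real deployment would read the XML from gmond or gmetad instead.

```python
import xml.etree.ElementTree as ET

# Hand-written sample in the layout of gmond's XML dump; hosts and values are invented.
SAMPLE_XML = """
<GANGLIA_XML VERSION="3.0.4" SOURCE="gmond">
  <CLUSTER NAME="Aryabhatta">
    <HOST NAME="node01"><METRIC NAME="load_one" VAL="3.50" TYPE="float"/></HOST>
    <HOST NAME="node02"><METRIC NAME="load_one" VAL="1.25" TYPE="float"/></HOST>
    <HOST NAME="node03"><METRIC NAME="load_one" VAL="0.25" TYPE="float"/></HOST>
  </CLUSTER>
</GANGLIA_XML>
"""

def per_host_metric(xml_text, metric="load_one"):
    """Return {host_name: value} for one metric across all hosts in the dump."""
    root = ET.fromstring(xml_text.strip())
    values = {}
    for host in root.iter("HOST"):
        for m in host.iter("METRIC"):
            if m.get("NAME") == metric:
                values[host.get("NAME")] = float(m.get("VAL"))
    return values

def cluster_average(xml_text, metric="load_one"):
    """Consolidate per-node values into a single cluster-wide mean."""
    values = per_host_metric(xml_text, metric)
    return sum(values.values()) / len(values) if values else 0.0

if __name__ == "__main__":
    print(per_host_metric(SAMPLE_XML))
    print(cluster_average(SAMPLE_XML))
```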

- Advanced Job Scheduling System for HPC cluster 'Aryabhatta'

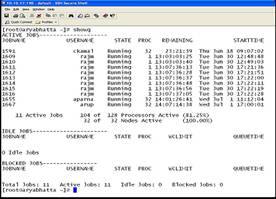

An advanced job scheduling system has been devised and configured on the High Performance Computing cluster 'Aryabhatta' using 'Torque' and 'Maui'. HPC clusters are mainly used for parallel applications, but parallel applications do not always fully occupy all resources (CPU, memory, disk etc.). The job scheduling system of 'Aryabhatta' has therefore been configured so that sequential applications can run alongside parallel applications without degrading the performance of the parallel applications.

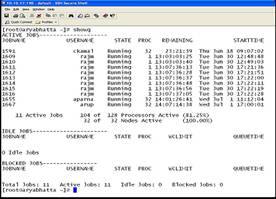

Queue of Advanced Job Scheduling System on Aryabhatta cluster
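The idea of letting sequential jobs use cores that parallel jobs leave idle, without oversubscribing any node, can be sketched as a simple first-fit placement. This is not Maui's actual scheduling algorithm. The node names and core counts below are hypothetical, and in the real system the policy is expressed through Torque queue and Maui configuration.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    name: str
    cores: int
    used: int = 0                               # cores already held by running jobs
    jobs: list = field(default_factory=list)

    @property
    def free(self):
        return self.cores - self.used

def place_parallel(nodes, job, cores_needed):
    """Reserve cores for a parallel job; return False if it cannot start now."""
    if sum(n.free for n in nodes) < cores_needed:
        return False
    remaining = cores_needed
    for n in nodes:
        take = min(n.free, remaining)
        if take:
            n.used += take
            n.jobs.append((job, take))
            remaining -= take
        if remaining == 0:
            break
    return True

def place_sequential(nodes, job):
    """Put a single-core job on the first node with an idle core.

    Never oversubscribes a node, so cores held by parallel jobs are untouched.
    """
    for n in nodes:
        if n.free > 0:
            n.used += 1
            n.jobs.append((job, 1))
            return n.name
    return None  # no idle core: the job waits in the queue

if __name__ == "__main__":
    nodes = [Node("n1", 4), Node("n2", 4)]
    place_parallel(nodes, "mpi-job", 6)
    print([place_sequential(nodes, f"seq{i}") for i in range(3)])
```

Because the sequential jobs only ever fill idle cores, the parallel job keeps every core it was given.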

- High-Availability File Servers

The file servers of the NIS-based centralized computing setup have been upgraded to provide high-speed data access along with high availability. The access speed is double that of the earlier setup. Two HP ML 370 G5 Xeon-based Linux servers with RAID 5+0 are configured in hot-standby mode to provide high-speed file access and data redundancy. The capacity of the computing users' area has been enhanced to 860 GB. The setup is built around four main tasks:

- running system accounting services on all computing servers in a synchronized manner
- saving the daily system accounting data to files
- creating an active user list from the accounting data of all computing servers
- mirroring data from one file server to the other

Perl scripts have been developed for these tasks.

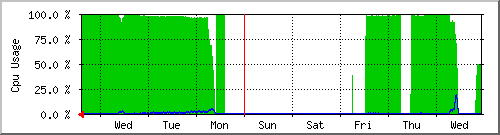
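Building an active user list from the accounting data of all computing servers is essentially a set union over per-server records. The original scripts were written in Perl, so the Python sketch below only illustrates the idea. Two details are assumptions: the record format (whitespace-separated, with the user name as the first field) and the list of system accounts to ignore.

```python
def active_users(accounting_by_server, ignore=frozenset({"root", "daemon", "nobody"})):
    """Merge per-server accounting records into one sorted list of active users.

    accounting_by_server maps a server name to an iterable of text lines.
    Each line is assumed (hypothetically) to start with the user name.
    """
    users = set()
    for server, lines in accounting_by_server.items():
        for line in lines:
            fields = line.split()
            if fields and fields[0] not in ignore:
                users.add(fields[0])
    return sorted(users)

if __name__ == "__main__":
    data = {"chi": ["alice ls 0.01", "root cron 0.00"],
            "beta": ["bob a.out 12.5", "alice vi 0.2"]}
    print(active_users(data))
```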

- Configuration of MRTG on all computing servers

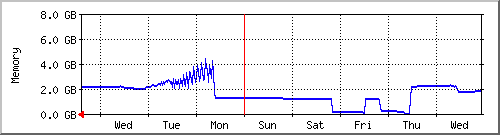

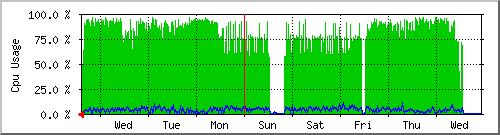

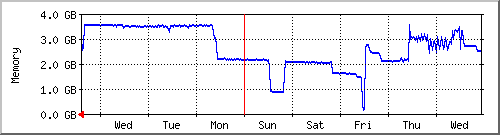

Multi Router Traffic Grapher (MRTG), an open-source, web-based tool, has been configured on all computing servers to monitor CPU load, memory usage, hard disk usage and network traffic. The data is stored in a round-robin database, so the storage footprint remains constant over time. The software logs each new sample on the client along with the previously recorded data. It then generates HTML pages that show the usage of resources such as CPU, memory and network in graphical form.

CPU and Memory usage of CHI (HP Alpha ES45) server

CPU and Memory usage of BETA (Intel Xeon 3.6 GHz) server
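The constant storage footprint comes from keeping a fixed number of slots and overwriting the oldest sample once they are full. Below is a minimal round-robin archive in Python. The slot count and sample values are illustrative, and the sketch does not reproduce MRTG's or RRDtool's file format.

```python
class RoundRobinArchive:
    """Fixed-size store: the newest sample overwrites the oldest once full."""

    def __init__(self, slots):
        self.slots = slots
        self.data = [None] * slots
        self.next = 0      # index where the next sample will be written
        self.count = 0     # number of valid samples (never exceeds slots)

    def add(self, value):
        self.data[self.next] = value
        self.next = (self.next + 1) % self.slots
        self.count = min(self.count + 1, self.slots)

    def samples(self):
        """Return stored samples from oldest to newest."""
        if self.count < self.slots:
            return self.data[:self.count]
        return self.data[self.next:] + self.data[:self.next]

if __name__ == "__main__":
    rra = RoundRobinArchive(3)
    for load in (10, 20, 30, 40):
        rra.add(load)
    print(rra.samples())
```

However many samples are added, `data` never grows beyond its initial slot count.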

- Porting of Parallel programs

The parallel application software ADF (Amsterdam Density Functional, version 2006.01, a Fortran program for calculations on atoms and molecules) was successfully ported on the 32-node Nalanda cluster. So was the parallel software DDSCAT, which calculates scattering and absorption of electromagnetic waves by targets with arbitrary geometries and complex refractive index using the discrete dipole approximation. WIEN2K_08 (the latest version) and the ADF bundle with HPMPI (version 2007) have been successfully ported on the 64-bit computing environment of the Aryabhatta cluster. ADF jobs are running successfully through the scheduler of the 'Aryabhatta' cluster. The parallel application CPMD (Car-Parrinello Molecular Dynamics, an electronic structure and molecular dynamics program) has been successfully ported on the 'Daksha' cluster of DAEGrid.

- Porting of Sequential programs

As required by users, various software packages have been successfully ported on Intel-based computing servers and clusters. The programs ported on Intel-based servers are:

- SDDS simulation software (Self Describing Data Sets, a modular system for accelerator design, simulation, control and analysis)
- Flair (Fluka Advanced Interface)
- Energy Gain of Photoelectrons, which computes the energy gain of an initially stationary electron due to the passage of a positively charged bunch, as a function of the electron's radial position in the beam pipe
- ECLOUD, which simulates the build-up of an electron cloud caused by photoemission and secondary emission inside an accelerator beam pipe during the passage of a closely spaced proton or positron bunch train
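A common impulse (kick) approximation gives a feel for the energy-gain calculation. It treats the bunch as an ultra-relativistic line of N charges passing an initially stationary electron at radius r. The momentum kick is dp = 2 N r_e m_e c / r, so dE = dp^2 / (2 m_e) = 2 m_e c^2 (N r_e / r)^2. This textbook estimate holds for r larger than the bunch size and for a non-relativistic electron that moves little during the passage. It is not necessarily the model used in the ported program, and the bunch population below is illustrative.

```python
# Physical constants (CODATA values)
R_E = 2.8179403262e-15      # classical electron radius [m]
ME_C2_EV = 0.51099895e6     # electron rest energy [eV]

def kick_energy_gain_ev(n_particles, radius_m):
    """Energy [eV] gained by a stationary electron at radius r from a passing bunch.

    Impulse approximation: dp = 2 N r_e m_e c / r and dE = dp^2 / (2 m_e),
    i.e. dE = 2 m_e c^2 (N r_e / r)^2. Assumes r > bunch size.
    """
    x = n_particles * R_E / radius_m
    return 2.0 * ME_C2_EV * x * x

if __name__ == "__main__":
    for r_mm in (5, 10, 20, 40):
        print(r_mm, "mm:", round(kick_energy_gain_ev(1e11, r_mm * 1e-3), 1), "eV")
```

The 1/r^2 dependence means electrons near the beam receive far larger kicks than those near the pipe wall.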

CPMD (Car-Parrinello Molecular Dynamics, an electronic structure and molecular dynamics program) and WIEN97 have been successfully ported on the IBM Power 5+ RISC architecture.

- DAEGrid Portal for RRCAT

DAEGrid is operational among BARC, RRCAT, IGCAR and VECC for sharing computing resources. Each DAE unit requires a grid portal to access the computing resources available on the grid. A Genius-based grid portal on Scientific Linux has been commissioned. Users from RRCAT can submit their parallel and sequential applications on DAEGrid using the Intel Fortran and C compilers, the gcc and g77 compilers, the Intel Math Kernel Library and MPICH.

- CORAL database copy tools for LHC

Under the DAE-CERN collaboration, a project was completed to develop a set of CORAL database copy tools for LHC users. The CoralTools package was designed and developed to provide export tools for the CORAL (Common Object Relational Access Layer) framework, simplifying the deployment of CORAL-based applications. The package can copy either individual tables or complete schemas between existing databases, including across database technologies. The tools support schema and data copy between Oracle, MySQL and SQLite relational databases.

The CoralTools package was developed in Python on top of the PyCoral interface, which itself was developed using the Python C API. CoralTools was tested and deployed on CERN servers. Programs were written for unit testing, integration testing and stress testing, and performance analysis was carried out on databases containing up to 500,000 records. This module has been released to users at CERN.
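CoralTools works through CORAL's technology-independent layer. As a stand-in that shows the same "copy the schema, then copy the rows" pattern, the sketch below copies one table between two SQLite databases using Python's standard `sqlite3` module. It does not use the CoralTools or PyCoral APIs, and the table and column names are hypothetical.

```python
import sqlite3

def copy_table(src, dst, table, batch_size=1000):
    """Copy one table (schema and data) from connection src to connection dst."""
    # 1. Recreate the schema from the source's stored CREATE statement.
    row = src.execute(
        "SELECT sql FROM sqlite_master WHERE type='table' AND name=?", (table,)
    ).fetchone()
    if row is None:
        raise ValueError(f"no such table: {table}")
    dst.execute(row[0])

    # 2. Stream the rows across in batches so memory use stays bounded.
    cur = src.execute(f'SELECT * FROM "{table}"')
    placeholders = ",".join("?" * len(cur.description))
    insert = f'INSERT INTO "{table}" VALUES ({placeholders})'
    while True:
        rows = cur.fetchmany(batch_size)
        if not rows:
            break
        dst.executemany(insert, rows)
    dst.commit()

if __name__ == "__main__":
    src = sqlite3.connect(":memory:")
    src.execute("CREATE TABLE runs (id INTEGER PRIMARY KEY, label TEXT)")
    src.executemany("INSERT INTO runs VALUES (?, ?)", [(i, f"run{i}") for i in range(2500)])
    dst = sqlite3.connect(":memory:")
    copy_table(src, dst, "runs")
    print(dst.execute("SELECT COUNT(*) FROM runs").fetchone()[0])
```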

- PyCORAL Software for LHC

Under the DAE-CERN collaboration, a project was completed to develop a Python interface to the CORAL (Common Object Relational Access Layer) APIs for LHC. PyCORAL is a complete module designed to provide CORAL functionality to Python users. It allows LHC users to write programs in Python and access relational storage facilities (Oracle, MySQL).

PyCoral is a Python extension module developed using the Python C API; in other words, it provides CORAL-equivalent functionality to Python programmers. This module has been released to users at CERN.

- LFC Database Lookup Service

Under the DAE-CERN collaboration, software for an LFC-based Database Lookup Service was developed. LFC (LCG File Catalogue) is a high-performance file catalogue that supports Oracle or MySQL as the database backend for storing the logical-to-physical mappings of files. A prototype of LFCLookupService was developed. This plugin library is responsible for logical-to-physical mapping and for providing the list of possible replicas based on the logical service name, authentication method and access mode. A set of LFCDbLookupService administration tools was developed for managing the mapping information stored in LFC. These command-line tools were implemented using the LFC APIs. They add, remove and list the replica entries of a specified logical connection string, and they export those entries to an XML file.

Unit and integration test programs were written to test the connection and authentication services and to run replica lookups against the LFC server. Performance analysis of lookup time versus server load was also carried out.
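The lookup maps a logical service name to candidate replicas, filtered by access mode and authentication method, and the administration tools can export that mapping to XML. Below is a minimal in-memory sketch of both steps. It does not call the LFC API. The element and attribute names (`servicelist`, `logicalservice`, `service`, `accessMode`, `authentication`) are modeled loosely on CORAL's dblookup-style XML and should be treated as assumptions, as should the access-mode rule in `lookup`.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

@dataclass(frozen=True)
class Replica:
    connection: str        # physical connection string (hypothetical values in tests)
    access_mode: str       # "readonly" or "update"
    authentication: str    # e.g. "password"

def lookup(catalogue, logical_name, access_mode, authentication):
    """Return the replicas of a logical service that satisfy the request.

    Assumed rule: a read-only request may use an 'update' replica, not vice versa.
    """
    allowed = {"readonly": {"readonly", "update"}, "update": {"update"}}[access_mode]
    return [r for r in catalogue.get(logical_name, [])
            if r.access_mode in allowed and r.authentication == authentication]

def export_xml(catalogue, logical_name):
    """Serialise the replica entries of one logical name to an XML string."""
    root = ET.Element("servicelist")
    ls = ET.SubElement(root, "logicalservice", name=logical_name)
    for r in catalogue.get(logical_name, []):
        ET.SubElement(ls, "service", name=r.connection,
                      accessMode=r.access_mode, authentication=r.authentication)
    return ET.tostring(root, encoding="unicode")
```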

- Parallelization of a program for calculating optics parameters for off-momentum particles and matching parameters in the Indus-2 lattice

A program that performs various tasks for the (linear) lattice was parallelized. Its tasks include:

- computing amplification factors for COD (closed orbit distortion) and beta-beat
- tune-shift scanning of quadrupoles
- obtaining the stable area of the quadrupoles
- calculating the optics parameters for off-momentum particles while matching parameters in the lattice

The original sequential program was written by scientists working in the Beam Diagnostics Section. The parallel version was tested with various input data sets. In parallel mode, the time required to complete a job drops by a factor approximately equal to the number of nodes used for computation. The parallel version of the software was implemented on an 8-node cluster.
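A near-linear speed-up is what one expects when independent scan points, such as one quadrupole setting per point, are split evenly among nodes. The original work used MPI. The sketch below uses Python's `multiprocessing` only as a local stand-in, and `evaluate_point` is a hypothetical placeholder for the per-point lattice computation.

```python
from multiprocessing import Pool

def block_partition(n_items, n_workers):
    """Split range(n_items) into n_workers contiguous blocks whose sizes differ by at most 1."""
    base, extra = divmod(n_items, n_workers)
    blocks, start = [], 0
    for rank in range(n_workers):
        size = base + (1 if rank < extra else 0)
        blocks.append(range(start, start + size))
        start += size
    return blocks

def evaluate_point(k):
    """Hypothetical placeholder for one independent lattice calculation."""
    return k * k

def run_block(block):
    return [evaluate_point(k) for k in block]

if __name__ == "__main__":
    points, workers = 100, 8
    with Pool(workers) as pool:
        partial = pool.map(run_block, block_partition(points, workers))
    results = [x for part in partial for x in part]   # gather in rank order
    print(len(results), results[:5])
```

Contiguous blocks keep the gathered results in the original scan order, which makes the output identical to the sequential run.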

- Porting of Beta Software for Beam Dynamics Section

This GUI-based software was ported, configured and made available over the network to the Beam Dynamics Section. It facilitates the calculation of beam parameters such as the beta function, betatron tune and dispersion for an electron storage ring.
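The following illustrates the kind of calculation involved, not the Beta program's own method. The periodic beta function and betatron tune of a cell follow from its one-period 2x2 transfer matrix M: cos(mu) = (M11 + M22) / 2, beta = M12 / sin(mu) and tune = mu / (2 pi). The thin-lens FODO cell below, with drift length L and quadrupole focal length f, is a hypothetical example.

```python
import math

def matmul(a, b):
    return [[a[0][0]*b[0][0] + a[0][1]*b[1][0], a[0][0]*b[0][1] + a[0][1]*b[1][1]],
            [a[1][0]*b[0][0] + a[1][1]*b[1][0], a[1][0]*b[0][1] + a[1][1]*b[1][1]]]

def drift(length):
    return [[1.0, length], [0.0, 1.0]]

def thin_quad(f):
    """Thin lens; f > 0 focuses and f < 0 defocuses in this plane."""
    return [[1.0, 0.0], [-1.0 / f, 1.0]]

def periodic_twiss(m):
    """Return (fractional tune, beta, alpha) at the start of a periodic cell."""
    cos_mu = 0.5 * (m[0][0] + m[1][1])
    if abs(cos_mu) >= 1.0:
        raise ValueError("cell is unstable (|trace| >= 2)")
    sin_mu = math.copysign(math.sqrt(1.0 - cos_mu**2), m[0][1])  # sign chosen so beta > 0
    mu = math.atan2(sin_mu, cos_mu) % (2 * math.pi)
    beta = m[0][1] / sin_mu
    alpha = (m[0][0] - m[1][1]) / (2 * sin_mu)
    return mu / (2 * math.pi), beta, alpha

def fodo(L, f):
    """Cell QF-drift-QD-drift; each later element multiplies from the left."""
    m = thin_quad(f)
    for element in (drift(L), thin_quad(-f), drift(L)):
        m = matmul(element, m)
    return m

if __name__ == "__main__":
    print(periodic_twiss(fodo(1.0, 1.0)))
```

For this cell the result can be checked against the textbook thin-lens relation sin(mu/2) = L / (2f).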

- Parallelization of the Computation of Hyperpolarizabilities of a Given Quantum System

A program for computing the second-order hyperpolarizabilities (Beta) of a quantum system was parallelized. The original sequential program was written by a scientist working in the Solid State Laser Physics Division. Calculating the second-order hyperpolarizabilities of a given quantum system is computationally demanding and can consume hours or even days of processing time. Parallelization was done by incorporating MPI library calls. The sequential program took 13 days to produce the final results. After parallelization, it takes only 2.3 days on an 8-node cluster.
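The reported timings translate into speed-up S = T_seq / T_par and parallel efficiency E = S / p as follows. The last figure is the experimentally determined serial fraction (Karp-Flatt metric), e = (1/S - 1/p) / (1 - 1/p). It is an interpretation added here under Amdahl's-law assumptions and is not stated in the original work.

```python
def speedup(t_serial, t_parallel):
    return t_serial / t_parallel

def efficiency(t_serial, t_parallel, p):
    return speedup(t_serial, t_parallel) / p

def karp_flatt(t_serial, t_parallel, p):
    """Experimentally determined serial fraction e = (1/S - 1/p) / (1 - 1/p)."""
    s = speedup(t_serial, t_parallel)
    return (1 / s - 1 / p) / (1 - 1 / p)

if __name__ == "__main__":
    # Hyperpolarizability run: 13 days sequential, 2.3 days on 8 nodes.
    print(f"S = {speedup(13, 2.3):.2f}")            # S = 5.65
    print(f"E = {efficiency(13, 2.3, 8):.2f}")      # E = 0.71
    print(f"e = {karp_flatt(13, 2.3, 8):.3f}")      # e = 0.059
```

An efficiency of about 71% on 8 nodes is consistent with roughly 6% of the work behaving as if it were serial, under that model.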

- Parallelization of a Program for Computing the Magnetic Field of a Wiggler Magnet in 3D Space

The sequential program wig12, developed as a tool for designing air-core superconducting wiggler magnets, was parallelized. The simulation gives more accurate results when the field is calculated at more points, but the computation time grows as the number of points increases. The program was therefore parallelized using MPI library calls on an 8-node cluster. Run on the cluster, the sequential program took 7 minutes 42 seconds for a field calculation on 16 points in the X direction, 10 in the Y direction and 10 in the Z direction. The parallel program produced the results in 59 seconds on the 8-node cluster.
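Field codes of this kind typically evaluate the Biot-Savart law at every grid point. Since the points are independent, the grid can be split among nodes. The sketch below is not wig12's algorithm. It discretizes a single circular coil into straight elements, sums dB = mu0 I / (4 pi) dl x r / |r|^3, and evaluates the result on a 16 x 10 x 10 grid like the one described above. The coil radius, current, grid spacing and offset are hypothetical, and the grid loop is the part an MPI version would distribute.

```python
import math

MU0 = 4e-7 * math.pi  # vacuum permeability [T m / A]

def circular_coil(radius, n_segments, z=0.0):
    """Approximate a circular loop in the plane z = const by straight elements.

    Returns a list of (midpoint, dl) vector pairs.
    """
    elems = []
    for k in range(n_segments):
        a0 = 2 * math.pi * k / n_segments
        a1 = 2 * math.pi * (k + 1) / n_segments
        p0 = (radius * math.cos(a0), radius * math.sin(a0), z)
        p1 = (radius * math.cos(a1), radius * math.sin(a1), z)
        mid = tuple((u + v) / 2 for u, v in zip(p0, p1))
        dl = tuple(v - u for u, v in zip(p0, p1))
        elems.append((mid, dl))
    return elems

def field_at(point, elems, current):
    """Biot-Savart sum B = mu0 I / (4 pi) * sum(dl x r / |r|^3)."""
    bx = by = bz = 0.0
    for (mx, my, mz), (dx, dy, dz) in elems:
        rx, ry, rz = point[0] - mx, point[1] - my, point[2] - mz
        r3 = (rx * rx + ry * ry + rz * rz) ** 1.5
        bx += (dy * rz - dz * ry) / r3
        by += (dz * rx - dx * rz) / r3
        bz += (dx * ry - dy * rx) / r3
    k = MU0 * current / (4 * math.pi)
    return (k * bx, k * by, k * bz)

def field_on_grid(elems, current, nx=16, ny=10, nz=10, step=0.01, z0=0.05):
    """Evaluate B on an nx x ny x nz grid; z0 keeps points off the coil plane."""
    return {(i, j, k): field_at((i * step, j * step, z0 + k * step), elems, current)
            for i in range(nx) for j in range(ny) for k in range(nz)}
```

At the loop centre the result should approach the analytic value mu0 I / (2R), which gives a quick accuracy check on the discretization.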

- Software for POOL-RDBMS backend for LHC Computing Grid Project at CERN

A prototype RDBMS component for the POOL persistency framework was developed under the DAE-CERN software collaboration as a component of the POOL project. The POOL project aims to implement a common persistency framework for LHC experiment data, where enormous volumes of data (petabytes) need to be stored as objects in databases. Acting as an RDBMS backend, this software stores objects in, and retrieves them from, relational databases. It was developed in C++ as a set of service APIs.

The work included designing the prototype as a plug-in in the POOL framework, finding a solution for remote database connectivity, and implementing various interfaces of the POOL storage manager for relational backends. Finally, the cross-technology referencing concept of the POOL storage manager was tested.
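An RDBMS backend for object persistency maps each object's attributes to table columns. It hands back a token identifying the stored row, from which the object can later be rebuilt. Below is a minimal sketch using Python's `sqlite3` and a dataclass. The `Event` class, its fields and the integer token are hypothetical and do not reflect POOL's actual C++ interfaces or token format.

```python
import sqlite3
from dataclasses import dataclass, astuple, fields

@dataclass
class Event:
    run: int
    number: int
    energy: float

class RelationalStore:
    """Store and retrieve objects of one dataclass type in a relational table."""

    def __init__(self, conn, cls):
        self.conn, self.cls = conn, cls
        self.table = cls.__name__
        self.cols = ", ".join(f'"{f.name}"' for f in fields(cls))
        conn.execute(f'CREATE TABLE IF NOT EXISTS "{self.table}" '
                     f"(token INTEGER PRIMARY KEY, {self.cols})")

    def write(self, obj):
        """Persist obj and return its token (here simply the SQLite row id)."""
        placeholders = ", ".join("?" * len(fields(self.cls)))
        cur = self.conn.execute(
            f'INSERT INTO "{self.table}" ({self.cols}) VALUES ({placeholders})',
            astuple(obj))
        return cur.lastrowid

    def read(self, token):
        """Rebuild the object stored under token, or return None."""
        row = self.conn.execute(
            f'SELECT {self.cols} FROM "{self.table}" WHERE token = ?', (token,)).fetchone()
        return None if row is None else self.cls(*row)
```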

- Scientific Library project for CERN

This project was done under the DAE-CERN collaboration. The work involved analysing and comparing two major scientific libraries, NAG C and GSL. It also involved developing some routines in C, which were tested and deployed.

- Software for instrumentation setup for training of magnets

An instrumentation setup was prepared at RRCAT for training superconducting magnets. The setup needed to be controlled by a PC. DASYLAB 4.0 on Windows 95 was used to develop the data acquisition application, and Microsoft Excel sheets were used for analysis. Apache, PHP, Java and the Swing API were used to develop the front end and back end for data management. The following software controls were provided in the acquisition application:

- Detection of the quenched coil

- Ramp control of the output current to the magnets

- Recording of the current values near the quench point

- Automatic shut-off of the power supply as soon as a quench occurs

- Switching on the power supply

- Resetting the power supply
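The ramp, detect, record and shut-off controls listed above can be outlined as a single loop. The original application was built in DASYLAB, so this Python sketch only illustrates the logic. The step size, threshold voltage and the `set_current` / `read_coil_voltages` callbacks are hypothetical placeholders for the real instrument interface.

```python
from collections import deque

def ramp_until_quench(set_current, read_coil_voltages, i_max, step,
                      v_threshold, history_len=5):
    """Ramp the supply current and stop on the first quench.

    Returns (index of the quenched coil or None, recent current values).
    """
    recent = deque(maxlen=history_len)   # currents recorded near the quench point
    current = 0.0
    set_current(current)                 # switch on at zero current
    while current < i_max:
        current = min(current + step, i_max)
        set_current(current)
        recent.append(current)
        for coil, v in enumerate(read_coil_voltages()):
            if abs(v) > v_threshold:     # resistive voltage: this coil has quenched
                set_current(0.0)         # shut the supply off immediately
                return coil, list(recent)
    return None, list(recent)
```

Keeping only a short history of currents mirrors the requirement to record values near the quench point, rather than storing the entire ramp.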

- Porting of Electron Gamma Shower package

This software was ported on a Linux-based server and made available to the Alignment Health Physics and QA Section. It is GUI-based software for generating electron-gamma showers in any material.

- Wiggler Magnet Visualization Software

The aim of this software is to generate geometrical parameters for the wiggler magnet coils so that the coils do not overlap and are well placed in their planes. Correctly placed coils generate the desired magnetic field when current flows through them. The software provides a user-friendly interface for entering coil parameters, from which it generates the coil geometry in the XY and XZ planes. If the user finds the placement acceptable, the entered coil parameters can be written to a file that serves as the input file for the simulation program. The software was developed in Visual Basic on the Windows platform.
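If each coil's footprint in a given plane is approximated by an axis-aligned rectangle, checking for overlap reduces to an interval test on both axes. The software itself was written in Visual Basic. The Python sketch, the rectangle approximation, the optional clearance `gap` and the output file layout are all assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass
class CoilFootprint:
    name: str
    x_min: float
    x_max: float
    y_min: float
    y_max: float

def overlaps(a, b, gap=0.0):
    """True if two rectangles overlap, or are closer than `gap`, on both axes."""
    return (a.x_min < b.x_max + gap and b.x_min < a.x_max + gap and
            a.y_min < b.y_max + gap and b.y_min < a.y_max + gap)

def find_clashes(coils, gap=0.0):
    """List every pair of coils that violates the placement rule."""
    return [(a.name, b.name) for i, a in enumerate(coils)
            for b in coils[i + 1:] if overlaps(a, b, gap)]

def write_coil_file(path, coils):
    """Write accepted coil parameters, one whitespace-separated line per coil."""
    with open(path, "w") as fh:
        for c in coils:
            fh.write(f"{c.name} {c.x_min} {c.x_max} {c.y_min} {c.y_max}\n")
```

Only when `find_clashes` returns an empty list would the parameters be written out for the simulation program.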

- Interactive Analysis and Visualization software for Volkswagen project data sets

Under the Volkswagen project, various data sets holding lattice coordinates are generated. User-friendly, interactive software was required for modeling and analysing these data sets. This software performs modeling and analysis of molecular parameters, i.e. lattice coordinates. It was developed in Visual Basic on the Windows platform.

- Software for Harmonic Coil Measurement

This software was developed for the Harmonic Coil Measurement System, which is used for aligning, measuring and analysing magnets. Routines were developed for:

- carrying out FFTs using a single coil and multiple coils
- processing the FFT results to determine correction factors for aligning the magnets
- determining the integrated gradient and gradient error
- storing and retrieving data files
- displaying the encoder signal and the different harmonics

The software was written in Java.
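In a rotating-coil measurement, the voltage sampled at N equally spaced angles over one turn is Fourier-analysed. The n-th coefficient then gives the n-th field harmonic (n = 2 quadrupole, n = 3 sextupole). A plain discrete Fourier transform in Python shows the idea. The original routines were in Java, and the sample count and synthetic signal in the test are hypothetical. Calibration by coil sensitivity factors, needed for absolute field values, is omitted.

```python
import cmath
import math

def harmonics(samples, n_max):
    """Return complex DFT coefficients c_1..c_n_max for one turn of coil data.

    For a signal sum_n A_n cos(n*theta + phi_n), |c_n| = A_n and arg(c_n) = phi_n,
    provided n_max < len(samples) / 2.
    """
    N = len(samples)
    coeffs = {}
    for n in range(1, n_max + 1):
        s = sum(v * cmath.exp(-1j * n * 2 * math.pi * k / N)
                for k, v in enumerate(samples))
        coeffs[n] = 2 * s / N
    return coeffs

def relative_harmonics(coeffs, main=2):
    """Amplitudes normalised to the main harmonic (main=2 for a quadrupole)."""
    ref = abs(coeffs[main])
    return {n: abs(c) / ref for n, c in coeffs.items()}
```

A direct DFT is O(N^2) but is adequate for the few dozen to few hundred samples per turn typical of such measurements. An FFT gives the same coefficients faster.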

- Fax based Tender Receiving System

This software package was developed for receiving tenders over fax with proper security arrangements and was implemented at IRPSU (Indore Regional Purchase and Stores Unit). Since quotations are secret documents, an image pattern containing the due date of the particular tender is printed on the enquiry forms. As soon as a fax message is received, the program searches the received image file for the due-date pattern. If the tender is not overdue, the message is encrypted. If the tender is overdue, a LATE OFFER patch is automatically superimposed on the message and the message is printed. Tender fax messages are decrypted only after the due date is over and the passwords of both the Purchase and Accounts departments have been entered correctly. Normal fax messages are printed as soon as they are received. The package was developed in C on the Linux platform.
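The release rules described above can be summarised as a small decision function. The package was written in C with its own image-pattern detection and encryption, so the Python sketch below covers only the decision logic. It assumes the due date has already been extracted from the image. The function names, the action labels and the password check against stored SHA-256 hashes are all hypothetical.

```python
import hashlib
import hmac
from datetime import datetime

def on_fax_received(due_date, received_at):
    """Decide what to do with an incoming fax; due_date is None for ordinary faxes."""
    if due_date is None:
        return "print"                       # normal fax: print immediately
    if received_at > due_date:
        return "stamp_late_offer_and_print"  # overdue tender: superimpose LATE OFFER
    return "encrypt_and_store"               # valid tender: keep sealed until opening

def _matches(password, stored_hash):
    # Illustration only: a real system should use a salted, slow key-derivation function.
    digest = hashlib.sha256(password.encode()).hexdigest()
    return hmac.compare_digest(digest, stored_hash)

def may_decrypt(now, due_date, purchase_pw, accounts_pw, purchase_hash, accounts_hash):
    """Tender faxes open only after the due date AND with both departments' passwords."""
    return (now > due_date
            and _matches(purchase_pw, purchase_hash)
            and _matches(accounts_pw, accounts_hash))

if __name__ == "__main__":
    due = datetime(2008, 3, 31, 15, 0)
    print(on_fax_received(due, datetime(2008, 3, 30, 11, 0)))
```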

- PVM for Beam Dynamics

A parallel software package, CYTRACK, was developed for tracking ions in an AVF-SF isochronous cyclotron. Ions are tracked from injection to extraction in the RF electric and dipole magnetic fields used in the AVF-SF cyclotron. The tracking involves Runge-Kutta integration of the Lorentz equation of motion of ions under isochronous acceleration. The program iteratively generates the magnetic field pattern required for isochronous acceleration in every successive orbit. All these calculations take a long time to run, so the software was parallelized under PVM. Distributing the computational load over three machines reduced the run time to one third of that of the sequential version.
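The core of such a tracking code is the Runge-Kutta integration of the Lorentz equation dv/dt = (q/m)(E + v x B). Below is a sketch of a classical fourth-order Runge-Kutta step in Python. It is non-relativistic and is not CYTRACK's implementation. The field functions and all numbers are hypothetical. With a uniform magnetic field and no electric field, an ion should return to its starting point after one cyclotron period 2 pi m / (q B), which makes a convenient check.

```python
import math

def lorentz_rhs(t, state, q_over_m, e_field, b_field):
    """Time derivative of (x, y, z, vx, vy, vz) under the Lorentz force."""
    x, y, z, vx, vy, vz = state
    ex, ey, ez = e_field(t, x, y, z)
    bx, by, bz = b_field(t, x, y, z)
    ax = q_over_m * (ex + vy * bz - vz * by)
    ay = q_over_m * (ey + vz * bx - vx * bz)
    az = q_over_m * (ez + vx * by - vy * bx)
    return (vx, vy, vz, ax, ay, az)

def rk4_step(t, state, dt, *args):
    """One classical fourth-order Runge-Kutta step."""
    k1 = lorentz_rhs(t, state, *args)
    k2 = lorentz_rhs(t + dt / 2, [s + dt / 2 * k for s, k in zip(state, k1)], *args)
    k3 = lorentz_rhs(t + dt / 2, [s + dt / 2 * k for s, k in zip(state, k2)], *args)
    k4 = lorentz_rhs(t + dt, [s + dt * k for s, k in zip(state, k3)], *args)
    return [s + dt / 6 * (a + 2 * b + 2 * c + d)
            for s, a, b, c, d in zip(state, k1, k2, k3, k4)]

def track(state, t_end, n_steps, q_over_m, e_field, b_field):
    dt = t_end / n_steps
    t = 0.0
    for _ in range(n_steps):
        state = rk4_step(t, state, dt, q_over_m, e_field, b_field)
        t += dt
    return state

if __name__ == "__main__":
    no_e = lambda t, x, y, z: (0.0, 0.0, 0.0)
    uniform_b = lambda t, x, y, z: (0.0, 0.0, 1.0)
    # q/m = 1 and B = 1 give a cyclotron period of 2*pi in these units.
    print(track([0, 0, 0, 1, 0, 0], 2 * math.pi, 2000, 1.0, no_e, uniform_b))
```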

- Graphics Library

The Calcomp Graphics Library consists of several Fortran-77/90 modules for plotting graphs and curves. This software runs in a Unix environment with gr85 terminal emulation. Most of the Ethernet cards and TCP/IP software support vt100 rather than gr85 emulation, so it was impossible to use this software for graphics on RRCATNet. A software interface on top of X Windows has therefore been developed in C. These C modules can be called from Fortran-77 on any Unix machine running X Windows. The resulting Fortran software using X Windows is GUI-based and supports a network-based client/server model.